There is a Spanish saying: A guerra avisada, no matan soldado.

Literally, "in a war announced in advance, no soldier gets killed." In other words, a timely warning can prevent a disaster.

Here are a few things we should be aware of regarding AI, in case we ever encounter a similar situation.

‘I’ve got your daughter’: Mom warns of terrifying AI voice cloning scam that faked kidnapping

Artificial intelligence (AI) is a set of technologies that enable computers to perform a variety of advanced functions, including the ability to see, understand and translate spoken and written language, analyze data, make recommendations, and more.

AI is the backbone of innovation in modern computing, unlocking value for individuals and businesses. For example, optical character recognition (OCR) uses AI to extract text and data from images and documents, turns unstructured content into business-ready structured data, and unlocks valuable insights.

Artificial intelligence defined

Artificial intelligence is a field of science concerned with building computers and machines that can reason, learn, and act in such a way that would normally require human intelligence or that involves data whose scale exceeds what humans can analyze.

AI is a broad field that encompasses many different disciplines, including computer science, data analytics and statistics, hardware and software engineering, linguistics, neuroscience, and even philosophy and psychology.

On an operational level for business use, AI is a set of technologies that are based primarily on machine learning and deep learning, used for data analytics, predictions and forecasting, object categorization, natural language processing, recommendations, intelligent data retrieval, and more.

By Cade Metz

Cade Metz writes about artificial intelligence and other emerging technologies.

Published May 1, 2023. Updated May 7, 2023.

In late March, more than 1,000 technology leaders, researchers and other pundits working in and around artificial intelligence signed an open letter warning that A.I. technologies present “profound risks to society and humanity.”

The group, which included Elon Musk, Tesla’s chief executive and the owner of Twitter, urged A.I. labs to halt development of their most powerful systems for six months so that they could better understand the dangers behind the technology.

“Powerful A.I. systems should be developed only once we are confident that their effects will be positive and their risks will be manageable,” the letter said.

The letter, which now has over 27,000 signatures, was brief. Its language was broad. And some of the names behind the letter seemed to have a conflicting relationship with A.I. Mr. Musk, for example, is building his own A.I. start-up, and he is one of the primary donors to the organization that wrote the letter.

By pinpointing patterns in that text, L.L.M.s learn to generate text on their own, including blog posts, poems and computer programs. They can even carry on a conversation.

This technology can help computer programmers, writers and other workers generate ideas and do things more quickly. But Dr. Bengio and other experts also warned that L.L.M.s can learn unwanted and unexpected behaviors.

These systems can generate untruthful, biased and otherwise toxic information.

Systems like GPT-4 get facts wrong and make up information, a phenomenon called “hallucination.”

Companies are working on these problems. But experts like Dr. Bengio worry that as researchers make these systems more powerful, they will introduce new risks.

The letter was written by a group from the Future of Life Institute, an organization dedicated to exploring existential risks to humanity. They warn that because A.I. systems often learn unexpected behavior from the vast amounts of data they analyze, they could pose serious, unexpected problems.

They worry that as companies plug L.L.M.s into other internet services, these systems could gain unanticipated powers because they could write their own computer code. They say developers will create new risks if they allow powerful A.I. systems to run their own code.

In Puerto Rico, my island, the news is warning people about an AI scam in which a caller asks you a few questions, copies your voice, and then tries to use it to make purchases and do many other things.

New AI-related scams clone voice of a loved one. Here’s what to look out for

Rockford Register Star

May 12, 2023

You get a call saying a relative was in a car crash and is being held in jail unless you spend hundreds of dollars to get them out. Well, that relative is likely OK and it’s likely you’re being scammed.

It’s part of a new scam that, according to the Federal Trade Commission, uses AI, or artificial intelligence, to defraud people.

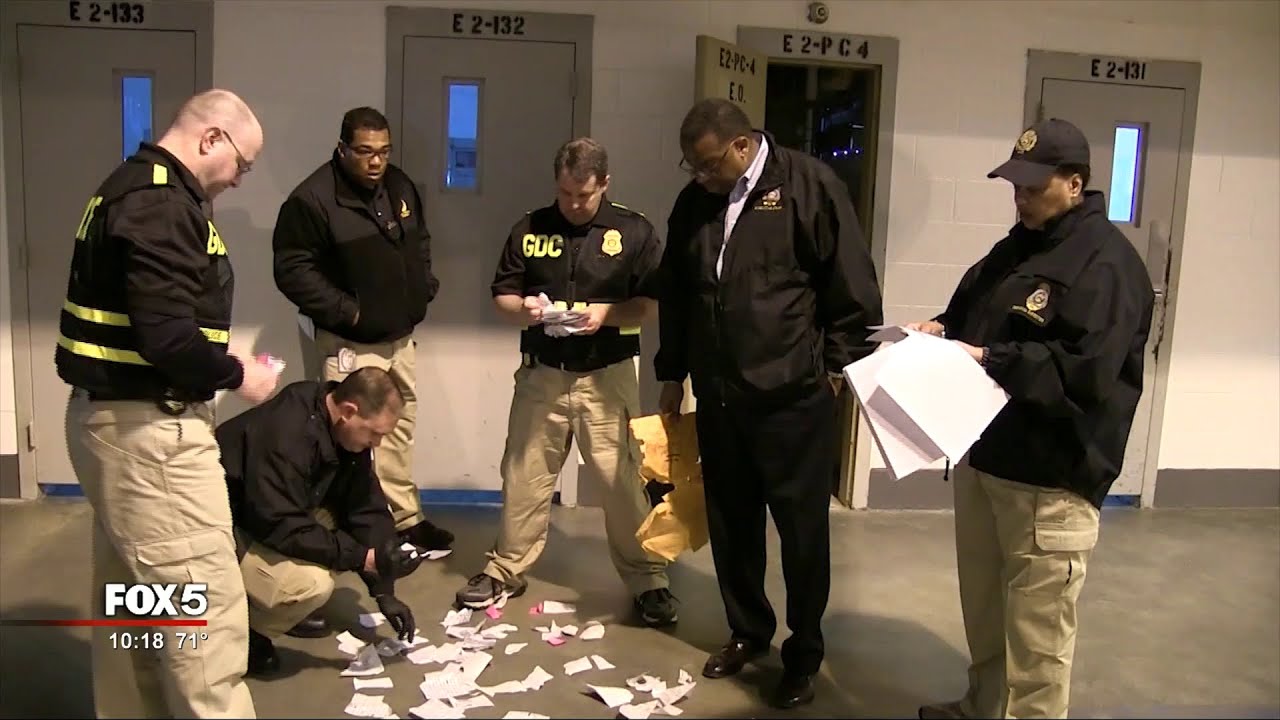

Police in Beloit, Wisconsin, recently said a resident was the victim of a scam that likely used AI.

Here’s what you need to know:

What does the scam look like?

You’ll receive a call that will sound like a friend or family member who will say they’ve been kidnapped or were in a car crash and need money as soon as possible to be released.

Why does it sound like I know the person when they call?

With the new AI technology, all scammers need is a video or audio clip of someone. Then, using the new technology, they’re able to clone the voice so when they call you, it sounds just like a loved one.

How can I tell if the phone call is real?

The FTC recommends contacting the person who supposedly called you to verify their story. If the call supposedly came from a jail, call the jail directly. If you can’t get hold of the person, try reaching out to another family member first.

How else can I tell the call may be fake?

- Incoming calls come from an outside area code, sometimes from Puerto Rico with area codes (787), (939) and (856).

- Calls do not come from the alleged kidnapped victim’s phone.

- Callers go to great lengths to keep you on the phone.

- Callers prevent you from calling or locating the “kidnapped” victim.
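As a rough illustration only, the warning signs above can be written down as a simple checklist. The Python sketch below is a toy under stated assumptions, not a tool from the FTC or the police: the `CallReport` fields and the `red_flags` function are hypothetical names invented for this example, and you would fill in the answers yourself based on what happens during the call.

```python
from dataclasses import dataclass


@dataclass
class CallReport:
    """What you noticed about a suspicious call (hypothetical field names)."""
    area_code: str                # area code shown on the incoming call
    from_victims_phone: bool      # did the call come from the "victim's" own number?
    caller_keeps_you_on_line: bool  # does the caller insist you stay on the phone?
    caller_blocks_contact: bool   # does the caller stop you from reaching the "victim"?


def red_flags(report: CallReport, local_area_codes: set) -> list:
    """Return the warning signs from the list above that apply to this call."""
    flags = []
    if report.area_code not in local_area_codes:
        flags.append("call comes from an outside area code")
    if not report.from_victims_phone:
        flags.append("call does not come from the alleged victim's phone")
    if report.caller_keeps_you_on_line:
        flags.append("caller goes to great lengths to keep you on the phone")
    if report.caller_blocks_contact:
        flags.append("caller prevents you from contacting the alleged victim")
    return flags


if __name__ == "__main__":
    # Example: a call from area code 787 received by someone whose local code is 815.
    report = CallReport("787", False, True, True)
    for flag in red_flags(report, {"815"}):
        print("Warning sign:", flag)
```

A call that raises none of these flags is not proof that it is genuine. Verifying the story with the person or another family member remains the safer step.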

How will the scammers ask for payment?

They’ll ask for payments that are hard for you to get back, such as wire transfers or gift cards.